How the New York Times A/B tests their headlines

Part 1 of a series on the New York Times, in which I take a close look at how (and when) the paper tests multiple headlines for a single article.

The New York Times is a big deal. As they tell their advertisers, the NYT is the #1 news source for young, rich thought leaders:

And yet it rarely attracts the kind of close scrutiny that, say, Fox News does. And that’s totally reasonable! Fox News is an absurd clown show and deserves every criticism it gets.

But I’m still curious about the NYT. It paints a certain picture of the world—and (in my circles) this ends up being the default picture, whether or not you agree with it.

I wanted to know more about this picture. So for the next few weeks I’ll be publishing a series of posts on the New York Times, drawing on data scraped from their front page and pulled from their official API.

This first post is all about A/B testing: how the NYT tests different headlines and how they change over time.

A/B Testing at the New York Times

Okay, look, it’s 2021: it would be shocking if the New York Times weren’t A/B testing headlines.

And the NYT is pretty open about it:

The Times also makes a practice of running what are called A/B tests on the digital headlines that appear on its homepage: Half of readers will see one headline, and the other half will see an alternative headline, for about half an hour. At the end of the test, The Times will use the headline that attracted more readers.
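The process described in that quote boils down to two steps: split readers evenly between two headlines, then keep whichever one draws more clicks. Here’s a minimal Python sketch of that idea. It is not the Times’s actual system; the hash-based bucketing, the reader and test IDs, and the traffic numbers are all assumptions made up for illustration.

```python
import hashlib


def assign_variant(reader_id: str, test_id: str) -> str:
    """Deterministically put a reader in bucket "A" or "B" (roughly 50/50).

    Hashing reader_id together with test_id keeps a reader in the same bucket
    for the whole test, while different tests split readers differently.
    """
    digest = hashlib.sha256(f"{test_id}:{reader_id}".encode()).digest()
    return "A" if digest[0] % 2 == 0 else "B"


def pick_winner(views, clicks):
    """Return the variant with the higher click-through rate (clicks / views)."""
    return max(views, key=lambda v: clicks[v] / views[v] if views[v] else 0.0)


# Hypothetical half-hour test with made-up traffic numbers.
views = {"A": 10_000, "B": 10_000}
clicks = {"A": 310, "B": 402}
print(assign_variant("reader-123", "headline-test-1"))
print(pick_winner(views, clicks))  # B
```

Hashing an ID (rather than flipping a coin on every page load) is one common way to keep the split stable. This sketch only compares raw click-through rates; a real system would likely also check whether the gap is bigger than random noise.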

But I still had questions:

How many articles are A/B tested?

How many headlines are tested for each article?

Does it help?

How different are the headlines?

Methodology

I wrote a script that does the following:

Scrapes the NYT homepage

Pulls out all the headlines

Associates them with article metadata from the official NYT API

Shoves them into a database

The script runs every five minutes. I started doing this on February 13, 2021, so what follows is based on three weeks of data.
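A stripped-down version of that pipeline might look like the sketch below. It’s an illustration under loose assumptions, not the actual script: it pretends each homepage headline is an `<h3>` inside an `<a href=...>` link (the real NYT markup is different and changes over time), it skips the HTTP fetch so it runs offline, and it leaves out the NYT API lookup entirely, keeping the article URL as the key that metadata could be joined on. The `observations` table and its columns are hypothetical.

```python
import sqlite3
from datetime import datetime, timezone
from html.parser import HTMLParser


class HeadlineParser(HTMLParser):
    """Collect (url, headline) pairs from <a href=...><h3>...</h3></a> markup.

    The tag structure is an assumption for illustration only.
    """

    def __init__(self):
        super().__init__()
        self.pairs = []
        self._href = None    # href of the <a> we are currently inside, if any
        self._in_h3 = False  # True while reading text inside a linked <h3>
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
        elif tag == "h3" and self._href:
            self._in_h3, self._text = True, []

    def handle_endtag(self, tag):
        if tag == "h3" and self._in_h3:
            self.pairs.append((self._href, "".join(self._text).strip()))
            self._in_h3 = False
        elif tag == "a":
            self._href = None

    def handle_data(self, data):
        if self._in_h3:
            self._text.append(data)


def store_snapshot(conn, page, seen_at=None):
    """Parse one homepage snapshot and insert every headline with a timestamp."""
    seen_at = seen_at or datetime.now(timezone.utc).isoformat()
    parser = HeadlineParser()
    parser.feed(page)
    parser.close()
    conn.executemany(
        "INSERT INTO observations (url, headline, seen_at) VALUES (?, ?, ?)",
        [(url, headline, seen_at) for url, headline in parser.pairs],
    )
    conn.commit()
    return len(parser.pairs)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE observations (url TEXT, headline TEXT, seen_at TEXT)")
sample = '<a href="https://www.nytimes.com/example"><h3>Example Headline</h3></a>'
print(store_snapshot(conn, sample))  # 1
```

Running something like this every five minutes could be as simple as a cron schedule of `*/5 * * * *`. Storing every raw observation, rather than only the changes, is what makes it possible to look at headline switches within a half-hour window later on.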

Results

As promised, the NYT does in fact A/B test their headlines. Roughly 29% of articles have multiple headlines, and the most headlines observed for a single article (so far) is eight.
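Given raw scrapes stored as (article URL, headline) pairs, numbers like these come from grouping by article and counting distinct headlines. A minimal sketch on toy data (the URLs and headlines below are placeholders, not real NYT data):

```python
from collections import Counter, defaultdict


def headline_stats(observations):
    """Summarize how many distinct headlines each article was seen with.

    `observations` is an iterable of (article_url, headline) pairs, one per
    scrape, so the same pair usually appears many times.
    """
    headlines = defaultdict(set)
    for url, headline in observations:
        headlines[url].add(headline)

    counts = [len(h) for h in headlines.values()]
    multi = sum(1 for c in counts if c > 1)
    return {
        "articles": len(counts),
        "share_multi": multi / len(counts) if counts else 0.0,
        "max_headlines": max(counts, default=0),
        # {number of distinct headlines: number of articles}
        "distribution": Counter(counts),
    }


obs = [
    ("/article-1", "Headline v1"), ("/article-1", "Headline v1"),
    ("/article-2", "Headline v1"), ("/article-2", "Headline v2"),
    ("/article-3", "Headline v1"),
    ("/article-4", "Headline v1"),
]
stats = headline_stats(obs)
print(stats["share_multi"], stats["max_headlines"])  # 0.25 2
```

This counts every distinct string, so even a one-character capitalization fix registers as a new headline.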

A lot of these headline changes are pretty minor—it’s common for the NYT to fix a capitalization or punctuation error after an article has been published.

And sometimes it seems like the copy editors can’t quite make up their minds: should “in” be capitalized?

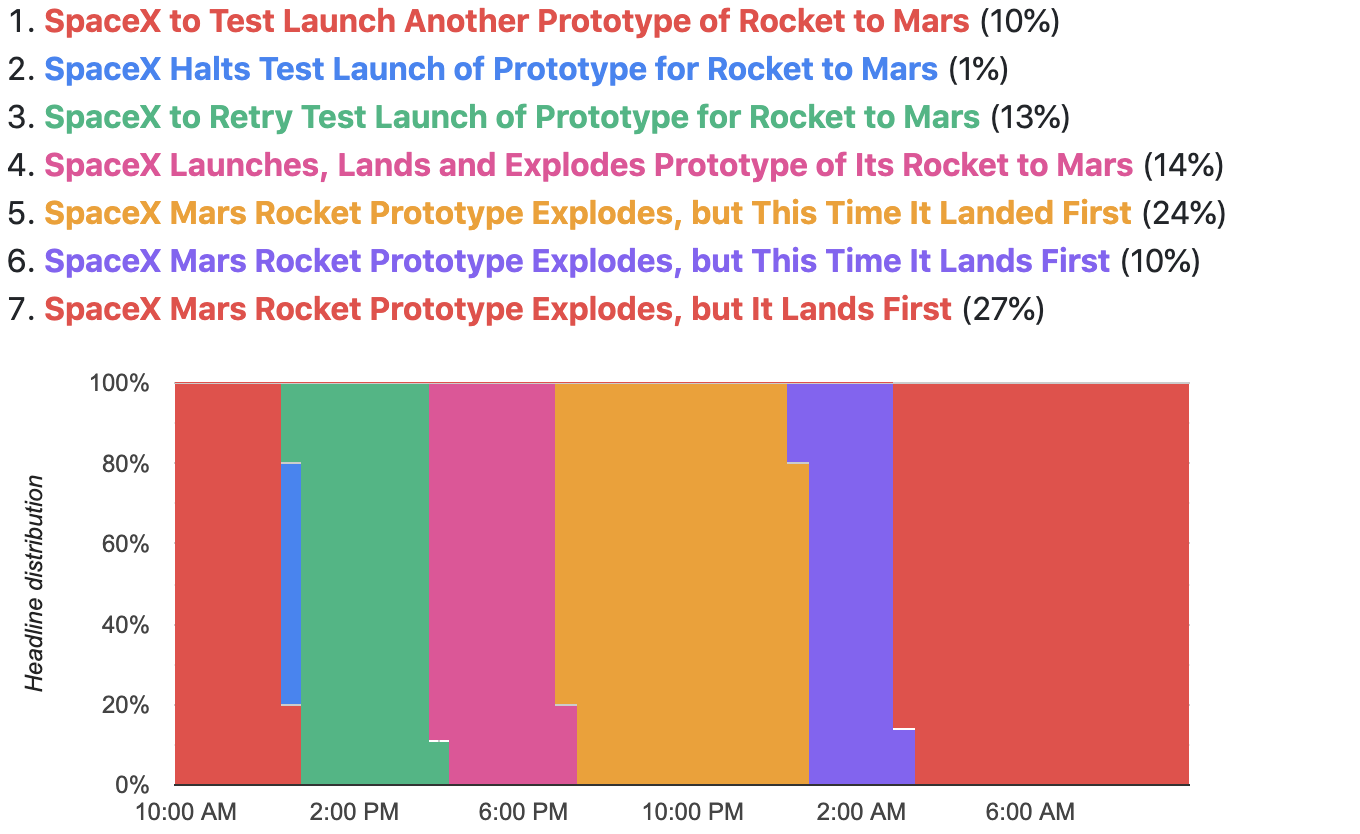
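One rough way to separate cosmetic edits like these from real rewrites is to compare headlines after throwing away case, punctuation, and extra whitespace. This is a hypothetical heuristic for illustration, not the method behind the numbers in this post, and the example headlines are invented:

```python
import unicodedata


def normalize(headline: str) -> str:
    """Casefold, drop all Unicode punctuation (curly quotes too), collapse spaces."""
    no_punct = "".join(
        ch for ch in headline if not unicodedata.category(ch).startswith("P")
    )
    return " ".join(no_punct.casefold().split())


def is_minor_change(old: str, new: str) -> bool:
    """True if two headlines differ only in capitalization, punctuation, or spacing."""
    return old != new and normalize(old) == normalize(new)


print(is_minor_change("Senate Votes In Favor", "Senate Votes in Favor"))    # True
print(is_minor_change("Senate Votes in Favor", "Senate Rejects the Bill"))  # False
```

Dropping punctuation by Unicode category (rather than using `string.punctuation`, which only covers ASCII) also catches the curly quotes and dashes that NYT headlines use. The trade-off is that “G.O.P.” and “GOP” count as the same headline, which is probably what you want here.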

Other times, the NYT changes headlines as the story evolves. Here’s a great story told in headlines:

But most headline swaps are clearly A/B tests looking for more clicks. Here’s an article about Biden’s governing style, with a pretty dramatic headline switch:

The only reason to make this kind of change is to boost engagement. And it worked! This article broke into the “most viewed” list a few hours after the headline swap (which supports my theory that liberals love reading about Trump).

Note: The chart above (and others in this post) makes it look like a headline switches cleanly from one version to the other, without any actual A/B testing. That’s just an artifact of how I’ve grouped the columns: each bar represents half an hour, and within that half hour my scraper sees the headline change back and forth many times, even though the colors are drawn as solid blocks.
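Here’s a minimal sketch of that kind of grouping, assuming scrapes are stored as (timestamp, headline) pairs. It rounds each scrape down to its half-hour window and counts how often each headline was seen there, so a bar that looks like one solid color can turn out to contain both versions. The six five-minute scrapes are made up:

```python
from collections import Counter, defaultdict
from datetime import datetime


def half_hour_bins(scrapes):
    """Group (timestamp, headline) scrapes into 30-minute buckets.

    Returns {bucket_start: Counter(headline -> number of scrapes)}.
    """
    bins = defaultdict(Counter)
    for ts, headline in scrapes:
        # Round the timestamp down to :00 or :30.
        start = ts.replace(minute=ts.minute - ts.minute % 30, second=0, microsecond=0)
        bins[start][headline] += 1
    return dict(bins)


# Six made-up scrapes, five minutes apart, flipping between two headlines.
scrapes = [
    (datetime(2021, 3, 1, 12, minute), headline)
    for minute, headline in zip(range(0, 30, 5), ["A", "B", "A", "A", "B", "A"])
]
print(half_hour_bins(scrapes)[datetime(2021, 3, 1, 12, 0)])
# Counter({'A': 4, 'B': 2})
```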

But not every A/B test is so successful. Here’s one that definitely failed (you might have to squint to see the tiny blue smidge on a smaller screen):

I hope this failure didn’t discourage the kooky NYT editor behind “Jumping Jehoshaphat!” The NYT could definitely use more Bugs Bunny-isms.

But overall there’s a pattern to these A/B tests: headlines tend to get more dramatic as time passes. Take this article about Cuomo’s sex scandal:

In the first swap, Cuomo goes from being attacked to being under siege; in the second, he’s no longer revising his plan: he’s apologizing.

And it worked: as the headline changes we can see this article climbing the “most viewed” chart.

The headlines get even spicier in this article about Trump’s CPAC address:

Trump starts off addressing conservatives and claiming leadership of the G.O.P., but in the final headline he has a hit list and is firing a warning shot. And sure enough, the bombastic rhetoric propels this article onto the “most viewed” list.

Okay, last example: this wildly popular article about Oprah’s interview with Meghan Markle:

I actually watched this interview—all two hours of it—and I can tell you that the first two headlines are a much better summary of what went down. Yes, Meghan does reveal that she contemplated suicide, but it’s a five-minute interlude in an interview that has a lot more going on. For example, none of these headlines mention the role that racism played in Meghan’s distress—a subject that takes up way more screen time than her suicidal thoughts.

Does it work?

The articles above got way more popular after some A/B testing—but is this true in general?

I crunched the numbers, and it turns out that A/B-tested NYT articles are 80% more likely to rank on a “most popular” list. And—not surprisingly—more headline testing correlates with more engagement:

Caveat: headline count and engagement are correlated, but who knows which way the causation runs. It makes sense to me that the more you A/B test a headline, the more likely your article is to get shared/liked/clicked. But it’s also possible that the NYT spends more time tweaking articles that are already popular. (Although, anecdotally, many of the articles I’ve looked at have lots of headline swaps before they land on a most-X chart.)
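Both numbers in this section are simple to compute once each article has a headline count and an engagement signal. The sketch below is hedged in two ways: the toy inputs are invented, not this post’s data, and Spearman rank correlation is used here as one reasonable way to relate headline count to engagement, since counts are small integers with lots of ties. Neither number says anything about which way the causation runs.

```python
def relative_lift(tested, untested):
    """P(ranked | tested) / P(ranked | untested) - 1; 0.8 means "80% more likely".

    Each argument is a list of booleans: did that article ever make a
    most-popular list?
    """
    p_tested = sum(tested) / len(tested)
    p_untested = sum(untested) / len(untested)
    return p_tested / p_untested - 1


def average_ranks(values):
    """1-based ranks; tied values share the average of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Toy data: 18 of 100 tested articles ranked vs. 10 of 100 untested ones.
tested = [True] * 18 + [False] * 82
untested = [True] * 10 + [False] * 90
print(round(relative_lift(tested, untested), 2))  # 0.8

# Toy data: distinct headlines per article vs. a made-up engagement score.
headline_counts = [1, 1, 2, 2, 3, 5]
engagement = [10, 40, 35, 60, 80, 95]
print(round(spearman(headline_counts, engagement), 2))
```

A positive lift or correlation only says tested articles tend to do better; editors choosing to test the stories they already expect to be popular would produce the same numbers.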

So what did I learn?

The NYT tests headlines to increase engagement

Duh.

Overall, this testing is pretty restrained

I’m pretty surprised by how few headlines the NYT tests. Most articles aren’t A/B tested, and most of the ones that are have just two headlines.

I’d sorta assumed that the NYT editors told their staff to submit every draft with, like, six possible headlines, and that some automated system would test all six in the first hour. But clearly this isn’t true, even though the data suggests that more A/B testing could boost engagement.

One possible explanation: 62% of the NYT’s revenue comes from subscriptions, and only 27% comes from advertising (and ad revenue is falling year over year). This means that views aren’t as important as subscriptions—and a front page full of clickbait would likely scare off potential subscribers.

But it still leads to emotionally charged headlines

The NYT might be more restrained than BuzzFeed, but we should keep in mind that it’s not a neutral observer. As the examples above show, A/B-tested headlines paint a picture that’s a lot more dramatic than the reality. Frequent NYT readers will end up thinking the world is scarier and more shocking than it really is.

Next time

In the next post I’ll look at the front page of the NYT, specifically:

How long articles stay on the front page

Which articles spend the most (and least) time on the front page

What kind of content is most likely (and least likely) to appear on the front page

How front-page time correlates with overall engagement

And other fun tidbits!

The data

If my free-tier EC2 instance hasn’t fallen over, you can browse the live headline data yourself:

Keep in mind:

All data is from February 13, 2021 onward

The site is cached for 30 minutes, so it might be slightly stale

Let me know if you find something interesting!