Forcing AI to answer medical questions accurately

Using a pile of JavaScript to make GPT-3.5 do something useful.

People are excited about AI

Have you heard? Artificial intelligence is here at last—even the New York Times is writing about it!

There are lots of AI models floating around, but the one that really broke the internet is called ChatGPT, created by OpenAI. What is ChatGPT, exactly?

Let's ask!

🙏 Praise be to ChatGPT!

But what if it was actually useful

I'm very grateful that OpenAI spent millions on R&D to make Twitter more entertaining. But what if we could use AI to solve actual problems?

For example: I know some people who have COVID-related hair loss. These people desperately want to know when their hair will stop falling out. Seems like something this Gift of the Lord (trained on the sum of human knowledge¹) would know.

Well, that's annoying!

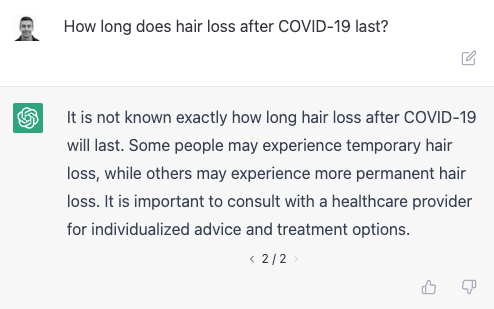

Undeterred, I submitted exactly the same question four seconds later, and—surprisingly!—got a totally different answer:

Well, that's a lot of words that basically boil down to "ask your doctor." But even if ChatGPT did give a concrete answer on the second go-round, how could I trust it? It just told me two totally different things!

AI has some flaws

So already I've encountered some shortcomings of our AI² overlords:

- Their knowledge is out of date
- Their knowledge is inconsistent
- They give vague, unhelpful answers
- They hallucinate (i.e., they give answers to questions that they don't have knowledge about)

But what if they didn't do that

There's probably a really fancy way to address these problems using abstruse mathematics, a pile of arXiv preprints, and LaTeX.

But what if instead I just wrote a bunch of bad JavaScript? Here's what I'm thinking:

1. Pull recent/relevant studies from Semantic Scholar
2. Rank them
3. Pull out relevant snippets
4. Summarize
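The post doesn't show its code, but step 1 might look something like this minimal sketch, assuming Node 18+ (for the built-in `fetch`) and Semantic Scholar's public Graph API paper-search endpoint. The helper names (`buildSearchUrl`, `filterRecent`, `searchPapers`), the field list, the 20-result limit, and the 2020 cutoff for "recent" are hypothetical choices, not the author's.

```javascript
// Step 1 sketch: fetch candidate studies from the Semantic Scholar Graph API.
// Assumes Node 18+ (global fetch). Field list, limit, and minYear are
// illustrative assumptions, not the original app's settings.

const S2_SEARCH = "https://api.semanticscholar.org/graph/v1/paper/search";

// Build the search URL for a plain-text question.
function buildSearchUrl(question, limit = 20) {
  const params = new URLSearchParams({
    query: question,
    fields: "title,abstract,year,url",
    limit: String(limit),
  });
  return `${S2_SEARCH}?${params.toString()}`;
}

// Keep only papers that have an abstract and were published in or after minYear.
function filterRecent(papers, minYear) {
  return papers.filter((p) => p.abstract && p.year != null && p.year >= minYear);
}

// Fetch and filter. Retries and rate-limit handling are left to the caller.
async function searchPapers(question, minYear = 2020) {
  const res = await fetch(buildSearchUrl(question));
  if (!res.ok) throw new Error(`Semantic Scholar returned HTTP ${res.status}`);
  const body = await res.json();
  // The search endpoint returns its results in a `data` array.
  return filterRecent(body.data ?? [], minYear);
}
```

Calling `await searchPapers("When will COVID-related hair loss stop?")` would return an array of paper objects with the requested fields, ready for ranking.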

I'd still use the fancy AI (GPT-3.5, a cousin of ChatGPT) for steps 2-4, but I'd only ask it to perform very specific tasks on a predefined set of studies. And I'd tell it very sternly not to hallucinate!
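One way to tell the model "very sternly" not to hallucinate is to hand it numbered excerpts and forbid everything else. Here's a hypothetical prompt builder for the summarizing step, assuming each excerpt is an object with `title`, `year`, and `text`. The instruction wording and the `NO_ANSWER` phrase are illustrative, and nothing forces the model to obey them, so its output still needs checking.

```javascript
// Sketch of a "stern" grounded prompt: the model may only use the numbered
// excerpts supplied, must cite them by number, and must admit when they
// don't answer the question. Wording and NO_ANSWER text are assumptions.

const NO_ANSWER = "The provided studies do not answer this question.";

function buildGroundedPrompt(question, excerpts) {
  // Number excerpts from 1 so the answer can cite them as [1], [2], ...
  const numbered = excerpts
    .map((e, i) => `[${i + 1}] (${e.title}, ${e.year}) ${e.text}`)
    .join("\n");

  return [
    "Answer the question using ONLY the excerpts below.",
    "Cite every claim with the excerpt number in square brackets, e.g. [2].",
    `If the excerpts do not contain the answer, reply exactly: "${NO_ANSWER}"`,
    "Do not use any outside knowledge.",
    "",
    "Excerpts:",
    numbered,
    "",
    `Question: ${question}`,
  ].join("\n");
}
```

The resulting string would be sent to GPT-3.5 as the prompt. Numbering the excerpts is the design choice that matters here: it gives every claim in the answer a handle that can be traced back to a specific study.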

Loading your answer…

Okay, so obviously the only reason I’m writing this post is that I did those things. I’ll skip over the boring bits (the JavaScript would make your eyes bleed) and jump right to the exciting demo GIF:

(Look, I might not know what a tensor is, but damn, that’s a nice loading screen. 🙌)

(Yes I’m aware that ChatGPT will soon be designing better ones. 😭)

Bingo!

Wow! That went way better than expected.

But can I trust it?

Look, I don't really trust these polite AI chatbots, and I certainly don't trust polite AI chatbots with a thick layer of untested JavaScript plastered on top.

But the cool thing is that I don't really need to trust them. Unlike the other "text in, text out" AIs, this one actually has an audit log. You can see exactly what excerpts were used to generate the answer and what studies those excerpts came from.
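Here's a minimal sketch of how such an audit log could be assembled, assuming the model was asked to cite excerpts with 1-based `[n]` markers and that each excerpt records its study's `title` and `url`. `buildAuditLog` is a hypothetical helper, not the site's actual code.

```javascript
// Sketch of an audit log: map each [n] citation in the model's answer back
// to the excerpt and study it refers to. Assumes 1-based [n] markers.

function buildAuditLog(answer, excerpts) {
  // Collect every distinct citation number mentioned in the answer.
  const cited = new Set();
  for (const match of answer.matchAll(/\[(\d+)\]/g)) {
    cited.add(Number(match[1]));
  }

  const entries = [];
  const invalid = [];
  for (const n of [...cited].sort((a, b) => a - b)) {
    const excerpt = excerpts[n - 1];
    if (excerpt) {
      entries.push({
        citation: n,
        text: excerpt.text,
        title: excerpt.title,
        url: excerpt.url,
      });
    } else {
      // The model cited an excerpt number that was never supplied.
      invalid.push(n);
    }
  }
  return { entries, invalid };
}
```

Collecting out-of-range citation numbers separately is a cheap sanity check: a reference to an excerpt that was never supplied suggests the model wandered off its sources.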

But…Google?

Yeah, okay, sure, you could just Google this question. But:

- You wouldn’t get to tell your friends you used ✨ArTiFiCiAl InTeLiGeNcE✨
- You (once again) wouldn’t know where the answers came from. Most of my Google searches turn up (heavily SEO-optimized) editorial-style articles with zero sources listed. But my JavaScript-AI Frankenstein thing gives answers that can always be traced back to primary research.

Can I try it?

Sure, do your worst! (Until my OpenAI credits run out.)

Who are you? What is this site?

I'm Tom Cleveland, and I'm interested in giving people access to better health information. I've been trying to do this in various ways over the last five years, and GlacierMD.com is where the prototypes go.

Sound like something you're interested in? Subscribe to this newsletter and receive…monthly?…updates about this project.

Thanks for reading!

² I’m only talking about GPT-style LLMs in this post. There are a ton of other AI architectures out there (with different tradeoffs), but I’m not an expert, and OpenAI has a really nice API.